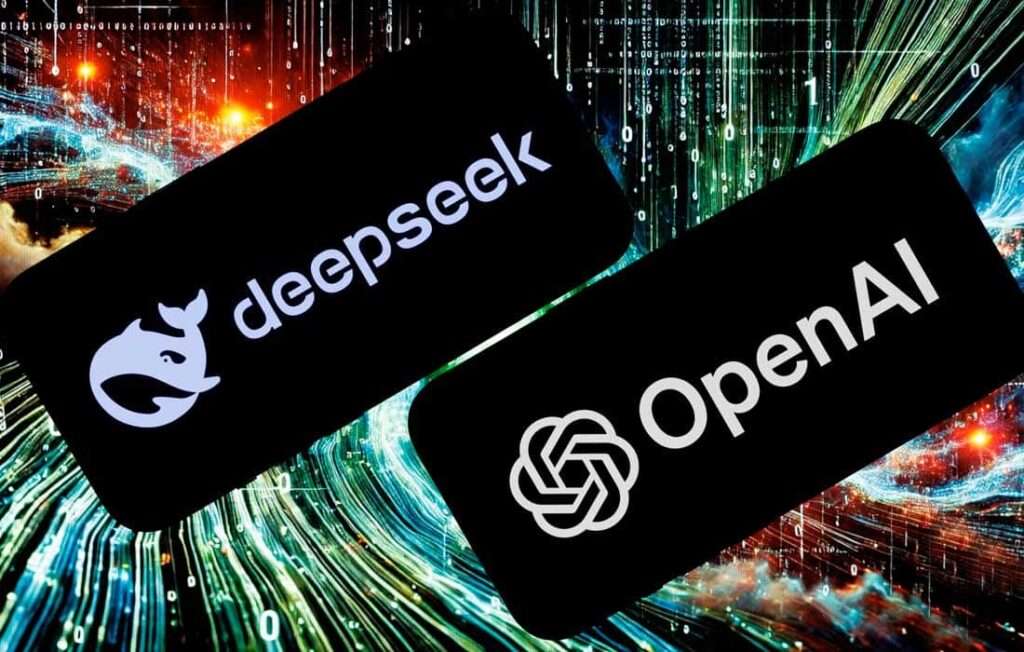

Imagine achieving more while using less – not just a little less, but roughly 94% less. That’s the story of DeepSeek, a Chinese AI startup rewriting the rules of artificial intelligence development. In January 2025, this relatively unknown company stunned the tech world by releasing R1, an AI model that matches or exceeds the performance of industry giants’ models while using just a fraction of their resources.

The numbers tell a compelling story: while tech giants deploy massive arrays of around 16,000 GPUs to train their models, DeepSeek reports comparable results with about 2,000 NVIDIA H800 units. This translates to a reported $5.58 million in training costs, compared to the $100+ million typically spent by competitors. But here’s the kicker – DeepSeek R1 isn’t just matching these tech giants; in several key reasoning tasks, it’s outperforming them.

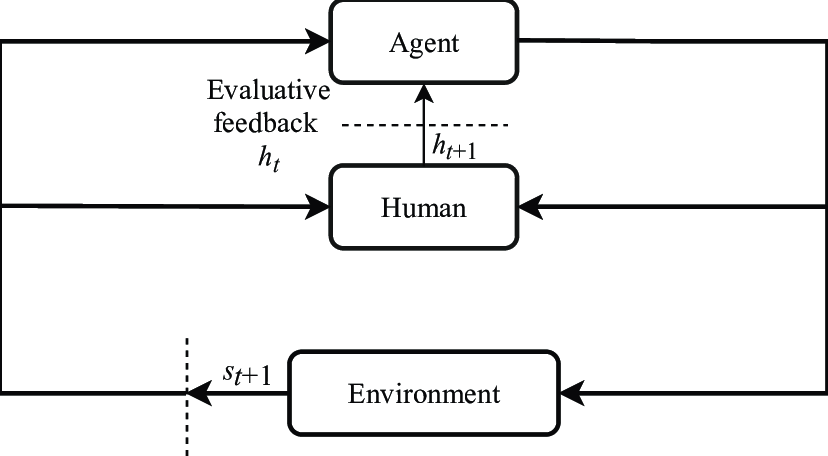

At the heart of this breakthrough is Group Relative Policy Optimization (GRPO), a reinforcement learning approach that teaches AI more like humans learn: through intelligent trial and error rather than brute-force memorization. This innovation isn’t just changing how we build AI; it’s democratizing access to advanced artificial intelligence by proving that world-class performance doesn’t require world-class budgets.

As industry experts note, this achievement represents more than just another product launch: it is a paradigm shift that could reshape the entire AI landscape. It’s a story that proves innovation often comes not from having the most resources but from having the courage to challenge established norms and think differently.

Breaking the Rules: How DeepSeek R1 Challenged AI Conventions

In the city of Hangzhou, a groundbreaking AI story began not in a garage like many Silicon Valley tales but in the analytical minds of quantitative traders. The year was 2023, and while tech giants were pumping billions into AI development, Liang Wenfeng, a successful hedge fund manager, quietly plotted a different path.

As the co-founder of High-Flyer, a quantitative hedge fund that has been successfully using AI for financial trading since 2013, Liang witnessed firsthand the power and limitations of existing AI systems. He questioned the fundamental assumption that throwing more resources at AI was the only way forward, and that question became the founding principle of DeepSeek.

Instead of building massive data centers or chasing after the latest high-end GPUs, Liang assembled a team of brilliant young graduates from top Chinese universities. These weren’t just computer scientists; the team included physicists, mathematicians, and philosophers, each bringing a unique perspective to building more intelligent AI. While others focused on brute force methods requiring enormous computing power, DeepSeek embraced efficiency through its novel reinforcement learning technique called Group Relative Policy Optimization (GRPO). The approach wasn’t just more efficient – it proved more effective at complex reasoning tasks.

The results spoke volumes. Using only 2,000 NVIDIA H800 GPUs – compared to the 16,000 units typically employed by larger competitors – DeepSeek R1 achieved performance levels that matched or exceeded models costing 20 times more to develop. Key metrics tell the story:

- Training Cost: ~$5.58 million (vs. $100+ million industry standard)

- GPU Usage: 2,000 NVIDIA H800s (vs. 16,000 industry standard)

- Development Time: Less than 2 years from concept to breakthrough

- Team Size: Initially, fewer than 50 core developers

We noted that “DeepSeek hasn’t just built a more efficient AI model – they’ve demonstrated that the future of artificial intelligence might not belong to those with the biggest budgets but to those with the most innovative approaches.”

The Secret Sauce: Inside DeepSeek’s Efficiency Revolution

Think about teaching a child to play chess. Instead of memorizing every possible move from a book, they learn by playing games, understanding patterns, and developing strategies through experience. This is the intuition behind Group Relative Policy Optimization (GRPO), the reinforcement learning method at the core of DeepSeek R1: the model tries several answers to the same problem, and each answer is rewarded according to how it compares with the others in its group.
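To make the "group relative" idea concrete, here is a minimal sketch of the scoring step only, not DeepSeek's training code. It assumes each sampled answer already has a scalar reward, and it normalizes every reward against the mean and standard deviation of its own group, as the DeepSeek-R1 technical paper describes. The function name, the `eps` guard, and the example rewards are illustrative assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to the rest of its group.

    rewards: one scalar reward per answer sampled for the same prompt.
    Returns (reward - group mean) / (group std + eps) for each answer.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    # eps avoids division by zero when every answer earns the same reward
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group: four answers to one math prompt, reward 1.0 if correct.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
# Correct answers get a positive advantage, incorrect ones a negative one.
```

Because the baseline comes from the group itself, this step needs no separate value network to estimate how good an answer "should" be, which is one reason the method is cheaper than classic actor-critic setups.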

When DeepSeek first announced its breakthrough, many Western experts were skeptical. How could a relatively unknown Chinese AI company achieve what tech giants couldn’t? Some suggested they must have used traditional distillation techniques – the practice of using larger models to train smaller ones. But DeepSeek’s approach was far more innovative.

Traditional AI models are like students cramming for an exam, memorizing vast amounts of information without truly understanding the underlying principles. However, DeepSeek’s pure reinforcement learning approach takes a smarter path, developing fundamental reasoning patterns that can be applied across different situations. It’s the difference between memorizing recipes and understanding the principles of cooking.

Their breakthrough extends beyond just GRPO. DeepSeek’s approach to resource management has revolutionized the industry through three key strategies:

- Selective Parameter Activation: The model activates only the most relevant neural pathways for each task, like using a spotlight instead of lighting up an entire room.

- Dynamic Resource Allocation: The system continuously optimizes resource usage, similar to a smart power grid that routes electricity where it’s needed most.

- Intelligent Memory Management: Rather than storing everything, the model retains only the most valuable patterns and principles, much like how human memory works.
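The first strategy, selective activation, is commonly implemented with mixture-of-experts routing: a small gate scores a set of expert sub-networks and only the top few run for a given input. The sketch below illustrates that general technique under stated assumptions; the toy experts, gate scores, and function names are hypothetical, and it is not a description of DeepSeek's actual router.

```python
import math


def top_k_route(gate_scores, k=2):
    """Select the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    # Subtract the top score before exponentiating for numerical stability
    top = gate_scores[chosen[0]]
    exps = [math.exp(gate_scores[i] - top) for i in chosen]
    total = sum(exps)
    return {i: e / total for i, e in zip(chosen, exps)}


def sparse_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = top_k_route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts standing in for sub-networks (illustrative only).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # hypothetical gate outputs for one input

weights = top_k_route(gate_scores, k=2)
output = sparse_forward(3.0, experts, gate_scores, k=2)
```

With `k=2`, only two of the four experts are evaluated for this input; the other two cost nothing, which is the "spotlight instead of lighting up an entire room" effect.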

According to recent reports, the results have surprised even the skeptics:

- Processing Power: Roughly 87.5% reduction in GPU count (2,000 vs. 16,000)

- Energy Consumption: 80% lower than traditional models

- Training Time: Significantly shortened due to efficient learning

- Cost Efficiency: 94% reduction in total investment

What’s most remarkable is that DeepSeek R1 achieved this through pure reinforcement learning without relying on the distillation techniques many thought necessary. Benchmark tests have demonstrated superior reasoning capabilities across various domains, from mathematical problem-solving to natural language understanding, proving that sometimes the most effective path is also the most efficient.

David vs Goliath: Benchmarking Against Tech Giants

Numbers tell stories, and DeepSeek R1’s story reads like a modern fairy tale in artificial intelligence. Think of it as a race where one runner decided to take a completely different route. While tech giants were sprinting down the well-worn path of bigger models and more computing power, DeepSeek R1 found a shortcut through the woods – and somehow arrived at the finish line first.

In head-to-head comparisons against OpenAI and other tech giants, DeepSeek R1 didn’t just keep pace – it often surpassed them in key areas:

- Complex Reasoning Tasks: DeepSeek R1 demonstrated superior performance in mathematical problem-solving and logical reasoning, matching or exceeding the capabilities of models that cost 20 times more to develop.

- Language Understanding: The model showed remarkable comprehension abilities across multiple languages, achieving comparable results to larger models while using significantly fewer parameters.

- Coding and Technical Tasks: DeepSeek R1’s efficiency shone through in software development challenges, producing high-quality code with notably faster response times.

The cost comparison tells an equally compelling story:

Training Infrastructure:

- DeepSeek R1: 2,000 NVIDIA H800 GPUs

- Traditional Approach: 16,000+ GPUs

- Cost Savings: Approximately 87.5% reduction in hardware requirements

Development Costs:

- DeepSeek R1: $5.58 million total investment

- Industry Standard: $100+ million

- Efficiency Gain: 94% cost reduction
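The percentages above follow directly from the quoted figures. As a quick check, this short sketch recomputes them; it treats $100 million as the baseline, which is an assumption because the article only gives "$100+ million".

```python
def percent_reduction(baseline, actual):
    """Return how much smaller `actual` is than `baseline`, in percent."""
    return (1 - actual / baseline) * 100


# Figures quoted in the article; the $100M baseline is the stated lower bound.
gpu_reduction = percent_reduction(16_000, 2_000)   # 87.5
cost_reduction = percent_reduction(100.0, 5.58)    # about 94.4
cost_ratio = 100.0 / 5.58                          # about 17.9x

print(f"GPU reduction:  {gpu_reduction:.1f}%")
print(f"Cost reduction: {cost_reduction:.1f}%")
print(f"Cost ratio:     {cost_ratio:.1f}x")
```

At exactly $100 million the cost ratio is about 18x; the "20 times" figure used elsewhere corresponds to a baseline of roughly $112 million, which is consistent with "$100+ million".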

What DeepSeek has achieved isn’t just about saving money. It’s about proving that innovation and clever engineering can trump brute force and bottomless budgets. They’re not just changing the game – they’re showing us a completely different way to play it.

Beyond the Numbers: Real-World Applications

Numbers and benchmarks are impressive, but the magic happens when technology moves out of the lab and into the real world. This is where DeepSeek R1 truly shines, transforming abstract concepts like “efficient reasoning” and “resource optimization” into solutions that make a difference in people’s lives.

Let’s look at how DeepSeek R1’s reasoning capabilities are reshaping key industries:

Scientific Research:

- Research teams report cutting data analysis time by 60%

- Complex pattern recognition across thousands of papers simultaneously

- Novel approach suggestions that researchers hadn’t considered

Healthcare Innovation:

- Diagnostic assistance through complex medical relationship analysis

- Personalized treatment planning considering multiple factors

- Research synthesis connecting insights across the medical literature

Business Intelligence:

- Market analysis processing vast amounts of data

- Strategic planning through multi-scenario analysis

- Supply chain optimization with preventive problem-solving

What makes these applications unique isn’t just their effectiveness but their accessibility. Thanks to DeepSeek R1’s efficient resource usage, organizations don’t need massive computing infrastructure to harness these capabilities. A local hospital can run sophisticated diagnostic assistance tools without requiring a data center. A small research lab can access cutting-edge AI capabilities without breaking its budget.

The democratization of AI isn’t just about making the technology cheaper. It’s about making it more accessible and practical for real-world applications. DeepSeek R1 isn’t just pushing the boundaries of what AI can do – it’s expanding who can use it.

The Human Element: DeepSeek’s Team Innovation

Behind every groundbreaking technology lies a story about people – their dreams, determination, and willingness to challenge conventional wisdom. When most tech giants were competing to hire seasoned veterans, DeepSeek took a different path, assembling a team that might have raised eyebrows in Silicon Valley: recent graduates, many in their mid-twenties, from diverse academic backgrounds ranging from theoretical physics to philosophy.

“The conventional path would have been to hire established AI researchers,” one team leader reflected. “But we weren’t looking to build conventional AI. We needed fresh eyes, minds that weren’t constrained by ‘the way things are usually done.’”

This strategy proved revolutionary. The team’s diverse academic backgrounds brought unique perspectives to AI development:

- Physicists contributed insights into efficient computational methods

- Mathematicians brought novel approaches to problem-solving

- Philosophers helped shape the model’s reasoning frameworks

- Computer scientists tied everything together with practical implementation

The team adopted what they playfully called the “Why Not?” approach. When someone proposed an unconventional idea, instead of listing reasons why it wouldn’t work, the first response was always, “Why not? Let’s think about how we could make that work.” This culture led to several breakthrough moments, including the birth of Group Relative Policy Optimization (GRPO).

This combination of fresh perspectives and psychological safety created an environment where innovation flourished. The result wasn’t just better AI but a better way of building AI.

Global Implications: Reshaping the AI Landscape

Remember when people said you needed billions of dollars to compete in cutting-edge AI development? DeepSeek R1 didn’t just challenge that assumption – it shattered it completely. Think of it as someone discovering you can build a luxury car in your garage for the price of a motorcycle. That’s exactly what’s happening in the AI world right now.

The impact is rippling through the industry in two major ways:

Investment Revolution:

- Venture capitalists are actively seeking teams that can do more with less

- Major tech companies are shifting focus from computing power to efficiency

- The “bigger is better” mentality is giving way to “smarter is better”

- Investment criteria now prioritize innovative approaches over massive funding

Democratization of AI:

- Universities running advanced AI research without massive grants

- Small labs contributing meaningful innovations to the field

- Startups developing AI solutions without enormous capital

- Healthcare facilities in developing regions implementing advanced AI systems

- Small research teams accessing powerful AI tools for climate modeling and drug discovery

What we’re seeing isn’t just a technical revolution. It’s a democratization of innovation itself. When you reduce the cost of failure, you multiply the opportunities for success.

Perhaps most significantly, DeepSeek’s approach is inspiring a new generation of innovators. “If DeepSeek could do it, why can’t we?” has become a rallying cry for innovative teams worldwide. This isn’t just about access to technology – it’s about democratizing possibility itself. When you remove resource constraints as the primary barrier to innovation, you open the door to a world where the best ideas can win, regardless of where they come from.

What’s Next: The Future of Efficient AI Development

If there’s one thing the DeepSeek R1 story has taught us, it’s that the future rarely follows the path we expect. As we stand at this turning point in AI development, the horizon ahead looks remarkably different from what anyone predicted. Remember when we thought the future of AI belonged exclusively to tech giants? DeepSeek didn’t just prove that assumption wrong – it opened up entirely new possibilities.

The road ahead is taking shape in fascinating ways:

Evolution of Learning:

- DeepSeek’s reinforcement learning strategy is inspiring a wave of innovation

- Teams worldwide are exploring new variations of GRPO

- The focus is shifting from raw power to elegant efficiency

- Companies are asking, “How can we make better use of what we have?”

Industry Adaptation:

- Traditional tech giants are revising their AI development strategies

- Emerging players are leveraging DeepSeek’s innovations

- Small teams are pushing boundaries in unexpected ways

- Cross-disciplinary approaches are gaining traction

Looking ahead, we can expect:

- More breakthrough innovations from unexpected sources

- Increased focus on practical applications

- Growing emphasis on sustainable AI development

- Continued democratization of AI technology

The future of AI isn’t about building bigger machines – it’s about building smarter ones. DeepSeek showed us that the path to artificial intelligence might be more like tending a garden than building a factory.

The story of DeepSeek R1 isn’t just about what one company achieved – it’s about what it proved was possible. The future of AI belongs not to those with the biggest budgets but to those with the boldest ideas and the courage to pursue them.

7 most prominent citations for the article:

- NBC News (Data Analysis) https://www.nbcnews.com/data-graphics/deepseek-ai-comparison-openai-chatgpt-google-gemini-meta-llama-rcna189568

- Time Magazine (Industry Impact) https://time.com/7210296/chinese-ai-company-deepseek-stuns-american-ai-industry/

- Al Jazeera (Economic Analysis) https://www.aljazeera.com/economy/2025/1/28/why-chinas-ai-startup-deepseek-is-sending-shockwaves-through-global-tech

- Sydney University (Academic Research) https://www.sydney.edu.au/news-opinion/news/2025/01/29/deepseek-ai-china-us-tech.html

- ArXiv (Technical Paper) https://arxiv.org/html/2501.12948v1

- Indian Express (Company Origins) https://indianexpress.com/article/technology/artificial-intelligence/how-deepseeks-origins-explain-its-ai-models-overtaking-us-rivals-like-chatgpt-9802415/

- Business Standard (Technical Analysis) https://www.business-standard.com/world-news/deepseek-r1-chinese-ai-research-breakthrough-challenging-openai-explained-125012700327_1.html
